14 Shrinkage Methods

Recall the two methods we’ve used so far to find sets of variable (and their coefficients):

- Choose models using domain knowledge and then applying computational methods (i.e., cross validation) to decide which model is superior. Apply least squares (i.e., calculus) to find the coefficients.

- Adding variables systematically using statistical criteria (F-tests, adjusted \(R^2\), \(C_p\), BIC, AIC). Apply least squares (i.e., calculus) to find the coefficients.

We move to a new model building algorithm that uses all \(p-1\) explanatory variables and constrains the coefficient estimates, or equivalently, shrinks the coefficient estimates toward zero. It turns out that shrinking the coefficients toward zero can substantially reduce the variance of the estimates (albeit adding a bit of bias).

- That is, use mathematical optimization to shrink (set) some of the coefficients to zero (while simultaneously solving for the other non-zero coefficients).

The main reason to use shrinkage techniques is when the model has a lot of predictor variables (\(p\) big) or when the model has a lot of predictor variables in comparison to the number of observations (\(n \approx p\) or \(n < p\)) — because that is when we get huge (infinite!) variability associated with the coefficients!

14.1 Model Complexity / Flexibility

The words complexity and flexibility can be thought of as synonyms. They both refer to how (not) simple a model is. However, their use is slightly different. A polynomial with 20 degrees is very flexible, but might not be described as complex (because it isn’t too hard to write down). A piecewise cubic model with dozens of pieces might not be overly flexible but would be complicated to write down. Or maybe both of the examples just given would be thought of as complex and flexible.

In the models we’ve been discussing, flexibility refers to the number of curves in a “linear” model. The more curve-y (think: polynomial with degree of many dozens), the more the model will (over-)fit the observations.

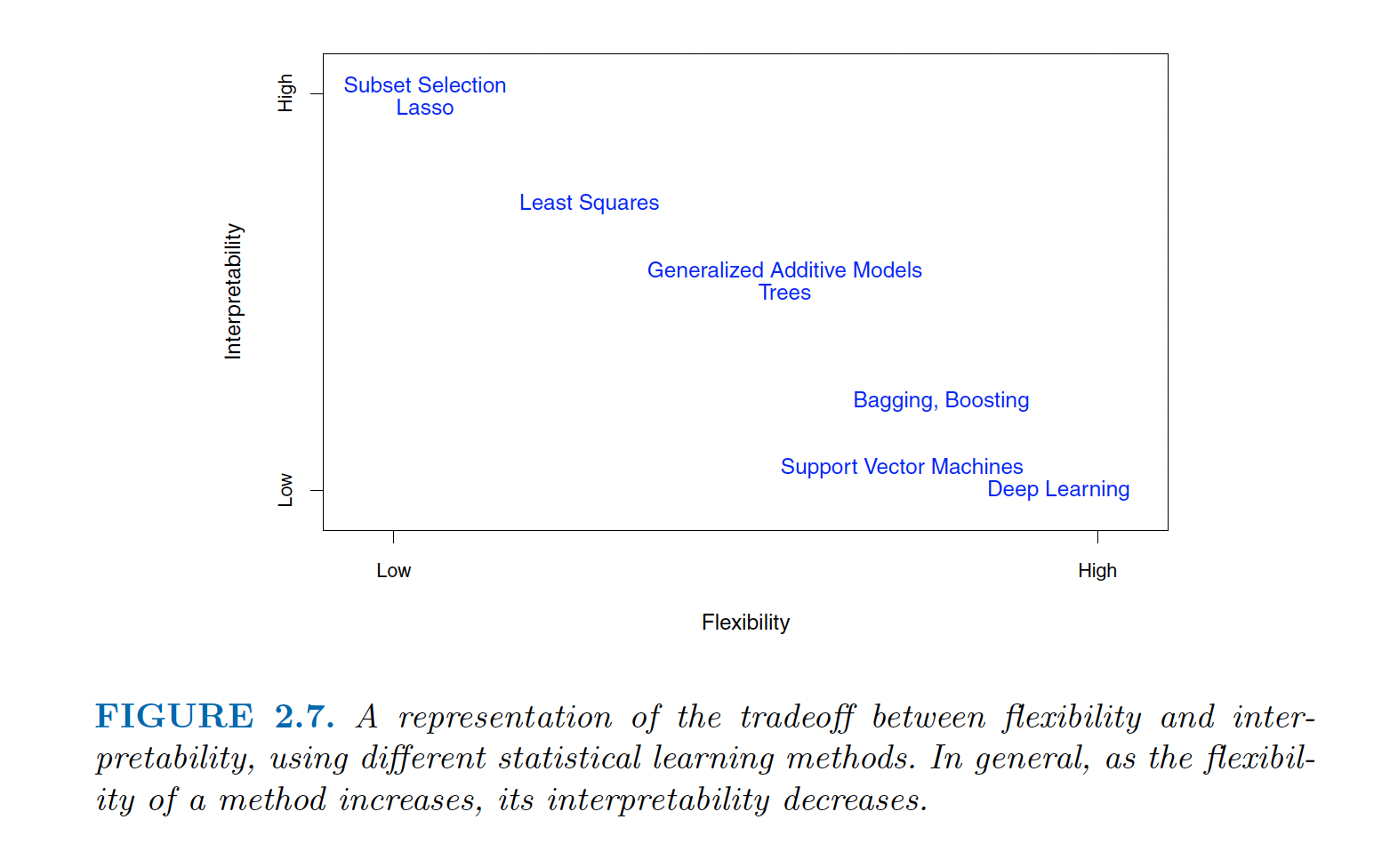

There are many other models, and you can see the flexibility scale of models from this class and of models not covered in this class. In non-linear models, flexibility refers to whether the model is fitting high level functions of the data. Sometimes that means over-fitting. But sometimes a lot of flexibility can capture true but complicated relationships among the variables. Note that flexibility is often at the expense of interpretability.

Note that for Lasso and Ridge Regression, the models are less flexible because they constrain the coefficient estimates. The constraints increase the bias and decrease the variability.

- increases in bias: the constrained coefficients won’t fit the observations as well as OLS. That is, the trend is different from what the data are presenting.

- decrease in variability: the constrained coefficients attenuate to zero which means that a different dataset will also have coefficients close to zero. By forcing the coefficients to be small and closer to one another across different datasets, the predictions will then me more similar (and less variable).

Figure 1.4: Image credit: Figure 2.7 of ISLR

14.2 On Inverting Matrices

\(X_{n \times p}\): The data matrix is \(n \times p\). We think of the data as being \(n\) points in \(p\) dimensions. It is important to realize that not only are the points indexed in \(p\) dimensions, but the points also take up the entire p-dimensional space. [As an aside: Whether a set of points takes up an entire space does not depend on how the points are indexed. For example, think about a (2-dimensional) piece of paper floating in the sky with many many points on it. You might index the coordinates of points on the paper using three dimensions, however, the paper / points themselves actually live in a 2-dimensional subspace.] To reiterate: in an ideal world, the \(n\) points live in \(p\) space and cannot be considered to live in a smaller dimension than \(p\). Sometimes, the \(n\) points are indexed to live in \(p\) space, but actually take up a lower dimensional subspace (e.g., if two of the variable columns are perfectly correlated).

However, there are times when an \(n \times p\) matrix lives in a space which is smaller than \(p\). For example,

- If two of the \(p\) columns are exact linear combinations of one another, then the points will actually live in \(p-1\) space.

- If the number of points is less than \(p\) (\(n < p\)) then the points will only live in \(n\) space. For example, there is no way for two points take up three dimensions!

\(X^t X\): The matrix \(X^tX\) is a linear transformation of the original \(X\) matrix. That is, if \(X\) lives in \(p\) space, it can’t be linearly transformed into a higher dimension. We could transform \(X\) into a higher dimension by using functions or some kind of kernel mapping, but we can’t do it via linear transformations. That is to say, if \(X\) has a rank which is lower than \(p\), any linear combination will also, necessarily, transform the data into a space which is lower than \(p\).

\((X^tX)^{-1}\): The inverse of the matrix also represents a mapping. Recall that if \(AX = Y\) then \(X= A^{-1}Y\). But if we are mapping into a smaller space (smaller than \(p\)) then we can’t invert back to a larger space (i.e., back to \(p\)). And because \((X^tX)^{-1}\) is a \(p \times p\) matrix, we are trying to invert back to a \(p\) dimensional space. Recall the Big Theorem in Linear Algebra that says if a \(p \times p\) matrix has rank lower than \(p\), it isn’t invertible (also that it will have determinant zero, will have some eigenvalues that are zero, etc.)

So… The point is that if \(X\) doesn’t have full rank (that is, if it has dimension less than \(p\)), there will be problems with computing \((X^tX)^{-1}\). And the matrix \((X^tX)^{-1}\) is vitally important in computing both the least squares coefficients and their standard errors.

14.3 Ridge Regression

An excellent discussion of Ridge Regression is given by Wieringen (2021).

Recall that the OLS (ordinary least squares) technique minimizes the distance between the observed and predicted values of the response. That is, we found the \(b_0, b_1, \ldots b_{p-1}\) that minimize: \[SSE = \sum_{i=1}^n \bigg( Y_i - b_0 - \sum_{j=1}^{p-1} b_j X_{ij} \bigg)^2.\]

Using the OLS algorithm for modeling, the values of \(b_i\) give the exact same model as the model built using the standardized variables which produce \(b_i^*\). However, as you will see, it is important to standardize all variables before running ridge regression.

For ease of computation, we will assume from here on that the variables have all been standardized as described above (in a previous section). [Note: ISL describes standardizing by only dividing by the standard deviation and not centering. The two different methods will not produce different models (with respect to significance, etc.), but they will produce different intercept coefficients. Indeed, scale is the important aspect to consider when working with shrinkage models.]

The ridge regression coefficients are calculated in a similar way to OLS, but the optimization equation seeks to minimize:

\[\sum_{i=1}^n \bigg( Y_i - b_0 - \sum_{j=1}^{p-1} b_j X_{ij} \bigg)^2 + \lambda \sum_{j=1}^{p-1} b_j^2 = SSE + \lambda \sum_{j=1}^{p-1} b_j^2\] where \(\lambda \geq 0\) is a tuning parameter, to be determined separately. The ridge regression optimization provides a trade-off between two different criteria: variance and bias. As with OLS, ridge regression seeks to find coefficients that fit the data well (minimize SSE!). Additionally, ridge regression shrinks the coefficients to zero by adding a penalty so that smaller values of \(b_j\) are preferred - the shrinkage makes the estimates less variable. It can be proved that there is always a \(\lambda \ne 0\) which gives smaller E[MSE] than OLS. Note: the shrinkage does not apply to the intercept!

Note: the Gauss-Markov theorem says that OLS solutions are the best (i.e., smallest variance) linear unbiased estimates (BLUE). But if we add a bit of bias, we can do better. There is an existence theorem (Theobald 1974) that says: \[ \exists \ \ \lambda \mbox{ such that } E[MSE_{RR}] < E[MSE_{OLS}].\] Excellent description of the theory is given by Wieringen (2021).

14.3.1 The Ridge Regression Solution

\[\begin{eqnarray*} \mbox{OLS: } \underline{b} &=& (X^t X)^{-1} X^t \underline{Y}\\ \mbox{ridge regression: } \underline{b} &=& (X^t X + \lambda \mathtt{I})^{-1} X^t \underline{Y}\\ \end{eqnarray*}\]

Notes:

- The tuning parameter \(\lambda\) balances the effect of the two different criteria in the optimization equation. When \(\lambda=0\), ridge regression is reduced to OLS. As \(\lambda \rightarrow \infty\), the coefficient estimates will shrink to zero.

- We have assumed that the variables have been centered to have mean zero before ridge regression is performed. Therefore, the estimated intercept will be \[b_0 = \overline{Y} = \frac{1}{n} \sum_i Y_i \ \ \ \ \ \ (= 0 \mbox{ if $Y$ is also centered}) \]

- Note that the ridge regression optimization above is a constrained optimization equation, solved by Lagrange multipliers. That is, we minimize \(SSE = \sum_{i=1}^n \bigg( Y_i - b_0 - \sum_{j=1}^{p-1} b_j X_{ij} \bigg)^2\) subject to \(\sum_{j=1}^{p-1} b_j^2 \leq s\) for some value of \(s\). Note that \(s\) is inversely related to \(\lambda\).

14.3.2 Ridge Regression visually

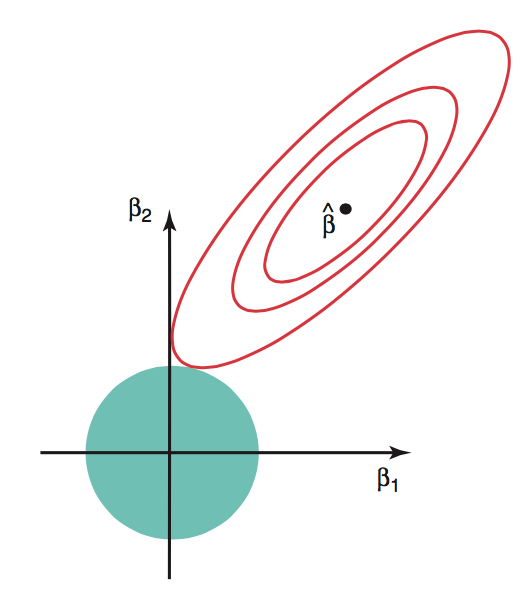

Ridge regression is typically applied to situations with many many variables. In particular, ridge regression will help us avoid situations of multicollinearity. It doesn’t make sense to apply it to a situation with only one or two variables. However, we will demonstrate the process visually with \(p=3\) dimensions because it is difficult to visualize in higher dimensions.

The ridge regression coefficient estimates solve the following optimization problem:

\[ \min_\beta \Bigg\{ \sum_{i=1}^n \Bigg( Y_i - b_0 - \sum_{j=1}^{p-1} b_j X_{ij} \Bigg)^2 \Bigg\} \mbox{ subject to } \sum_{j=1}^{p-1} b_j^2 \leq s\]

Figure 14.1: The red contours represent pairs of \(\beta\) coefficients that produce constant values for SSE on the data. The blue circle represents the possible values of \(\beta\) given the constraint (that the squared magnitude is less than some cutoff). Image credit: ISLR

14.3.3 Why Ridge Regression?

Recall that the main benefit to ridge regression is when \(p\) is large (particularly in relation to \(n\)). Remember that this (and multicollinearity) leads to instability of \((X^tX)^{-1}\). Which leads to huge variability in the coefficient estimates. A small change in the model or the observations can create wildly different estimates. So the expected value of the MSE can be quite inflated due to the variability of the model. Ridge regression adds a small amount of bias to the model, but it lowers the variance substantially and creates lower (on average) MSE values.

Additionally, ridge regression has computational advantages over the subset selection models (where all subsets requires searching through \(2^{p-1}\) models).

Why is ridge regression better than least squares?

The advantage is apparent in the bias-variance trade-off. As \(\lambda\) increases, the flexibility of the ridge regression fit decreases. This leads to decrease variance, with a smaller increase in bias. Regular OLS regression is fixed with high variance, but no bias. However, the lowest test MSE tends to occur at a balance between variance and bias. Thus, by properly tuning \(\lambda\) and acquiring less variance at the cost of a small amount of bias, we can find a lower potential MSE.

Ridge regression works best in situations for least squares estimates have high variance. Ridge regression is also much more computationally efficient that any subset method, since it is possible to simultaneously solve for all values of \(\lambda\).

14.3.4 Inference on Ridge Regression Coefficients

Note that just like the OLS coefficients, the RR coefficients are a linear combination of the \(Y\) values based on the \(X\) matrix and (now) \(\lambda\). It is not hard, therefore, to find the variance of the coefficient vector at a particular value of \(\lambda\). Additionally, the same theory that gives normality (and the resulting t-statistics) drives normality for the ridge regression coefficients.

\[var(b^{RR}) = \sigma^2 W X^t X W \mbox{ where } W = (X^t X + \lambda \mathtt{I})^{-1}\]

However, all the distributional properties above give theoretical results for a fixed value of \(\lambda\). We now discuss estimating \(\lambda\), but as soon as we estimate \(\lambda\), it becomes dependent on the data and thus a random variable. That is, the SE of \(b^{RR}\) is a function of not only the variability associated with the data estimating the coefficients but also the variability of the data estimating \(\lambda\).

An additional problem is that the RR coefficients are known to be biased, and the bias is not easy to estimate. Without a sense of where the variable is centered, the SE isn’t particularly meaningful. For these reasons, functions like lm.ridge() in R do not include tests / p-values but do approximate the SE of the coefficients (as an estimate).

14.4 How do you choose \(\lambda\)?

Note that \(\lambda\) is a function of the data, and therefore a random variable to estimate (just like estimating the coefficients). However, we can use diagnostic measures to give a sense of \(\lambda\) values which will give a good variance-bias trade-off.

- Split data into test and training, and plot test MSE as a function of \(\lambda\).

- Actually cross validate the data (remove test samples in groups of, say 1/10, to see which \(\lambda\) gives the best predictions on “new” data) and find \(\lambda\) which gives the smallest MSE for the cross validated data.

Cross Validating to Find \(\lambda\)

- Set \(\lambda\) (e.g., try \(\lambda\) between \(10^{-2}\) and \(10^{5}\):

lambda.grid = 10^seq(5,-2, length =100))- Remove 1/10 of the observations (partition the data into 10 groups).

- Find the RR / Lasso model using the remaining 90% of the observations and the given value of \(\lambda\).

- Predict the response value for the removed points given the 90% training values.

- Repeat (a) - (c) until every point has been predicted as a test value.

- Using the CV predictions, find \(MSE_\lambda\) for the \(\lambda\) at hand.

- Repeat steps 1 and 2 across the grid of \(\lambda\) values.

- Choose the \(\lambda\) value that minimizes the CV \(MSE_\lambda\).

14.5 Lasso

Ridge regression had at least one disadvantage; it includes all \(p\) predictors in the final model. The penalty term will set many of them close to zero, but never exactly to zero. This isn’t generally a problem for prediction accuracy, but it can make the model more difficult to interpret the results. Lasso overcomes this disadvantage and is capable of forcing some of the coefficients to zero granted that \(\lambda\) is big enough. Since \(\lambda = 0\) results in regular OLS regression, as \(\lambda\) approaches \(\infty\) the coefficients shrink towards zero.

14.5.1 Lasso Coefficients

The lasso (least absolute shrinkage and selection operator) coefficients are calculated from a similar constraint to that of ridge regression, but the calculus is much harder now. The L-1 norm is the only norm that gives sparsity and is convex (so that the optimization problem can be solved). The lasso optimization equation seeks to minimize:

\[\sum_{i=1}^n \bigg( Y_i - b_0 - \sum_{j=1}^{p-1} b_j X_{ij} \bigg)^2 + \lambda \sum_{j=1}^{p-1} |b_j| = SSE + \lambda \sum_{j=1}^{p-1} |b_j|\] where \(\lambda \geq 0\) is a tuning parameter, to be determined separately. As with ridge regression lasso optimization provides a trade-off between bias and variance. Lasso seeks to find coefficients that fit the data well (minimize SSE!). Additionally, lasso shrinks the coefficients to zero by adding a penalty so that smaller values of \(b_j\) are preferred. Note: the shrinkage does not apply to the intercept! The minimization quantities for ridge regression and lasso are extremely similar: ridge regression constrains the sum of the squared coefficients; lasso constrains the sum of the absolute coefficients. [As with ridge regression, we use standardized variables in modeling.]

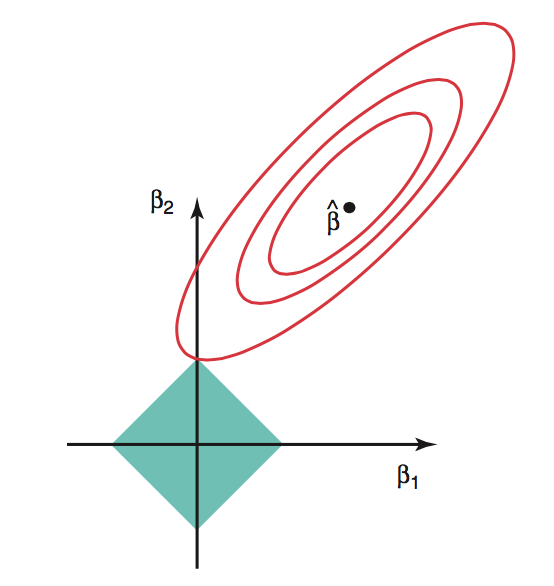

14.5.2 Lasso visually

As with ridge regression Lasso is also typically applied to situations with many many variables (also to avoid multicollinearity). It doesn’t make sense to apply it to a situation with only one or two variables. However, we will demonstrate the process visually with p=3 dimensions because it is difficult to visualize in higher dimensions. Notice here that there is a very good chance for the red contours to hit the turquoise diamond at a corner (producing some coefficients to be estimated as zero). The corner effect becomes more extreme in higher dimensions.

The lasso coefficient estimates solve the following optimization problem:

\[ \min_\beta \Bigg\{ \sum_{i=1}^n \Bigg( Y_i - b_0 - \sum_{j=1}^{p-1} b_j X_{ij} \Bigg)^2 \Bigg\} \mbox{ subject to } \sum_{j=1}^{p-1} |b_j| \leq s\]

Figure 1.5: The red contours represent pairs of \(\beta\) coefficients that produce constant values for SSE on the data. The blue diamond represents the possible values of \(\beta\) given the constraint (that the absolute magnitude is less than some cutoff). Image credit: ISLR

The key to lasso (in contrast to ridge regression) is that it does variable selection by shrinking the coefficients all the way to zero. We say that the lasso yields sparse models - that is, only a subset of the original variables will be retained in the final model.

14.5.3 How do you choose \(\lambda\)?

Note that \(\lambda\) is a function of the data, and therefore a random variable to estimate (just like estimating the coefficients). However, we can use diagnostic measures to give a sense of \(\lambda\) values which will give a good variance-bias trade-off.

- Split data into test and training, and plot test MSE as a function of \(\lambda\).

- Actually cross validate the data (remove test samples in groups of, say 1/10, to see which \(\lambda\) gives the best predictions on “new” data) and find \(\lambda\) which gives the smallest MSE for the cross validated data.

14.6 Ridge Regression vs. Lasso

Quote from An Introduction to Statistical Learning, V2, page 246.

These two examples illustrate that neither ridge regression nor the lasso will universally dominate the other. In general, one might expect the lasso to perform better in a setting where a relatively small number of predictors have substantial coefficients, and the remaining predictors have coefficients that are very small or that equal zero. Ridge regression will perform better when the response is a function of many predictors, all with coefficients of roughly equal size. However, the number of predictors that is related to the response is never known a priori for real data sets. A technique such as cross-validation can be used in order to determine which approach is better on a particular data set.

As with ridge regression, when the least squares estimates have excessively high variance, the lasso solution can yield a reduction in variance at the expense of a small increase in bias, and consequently can generate more accurate predictions. Unlike ridge regression, the lasso performs variable selection, and hence results in models that are easier to interpret.

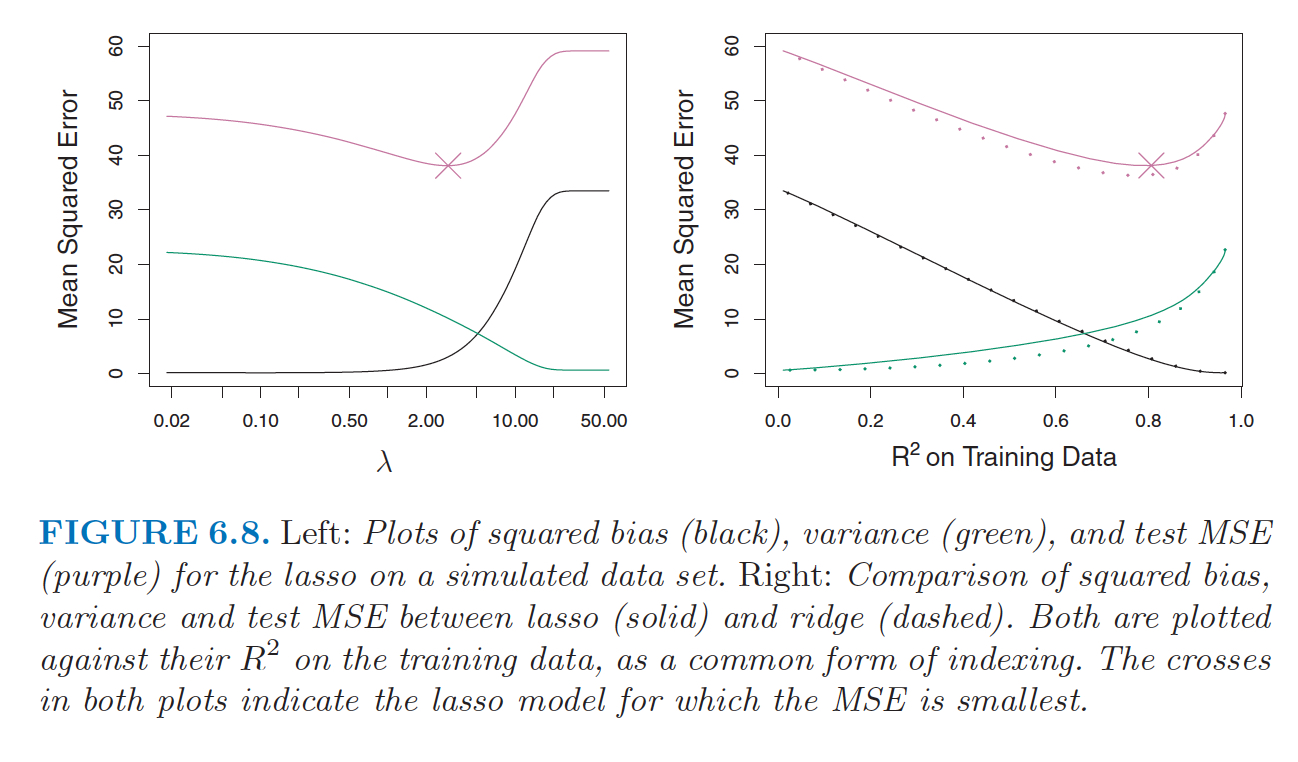

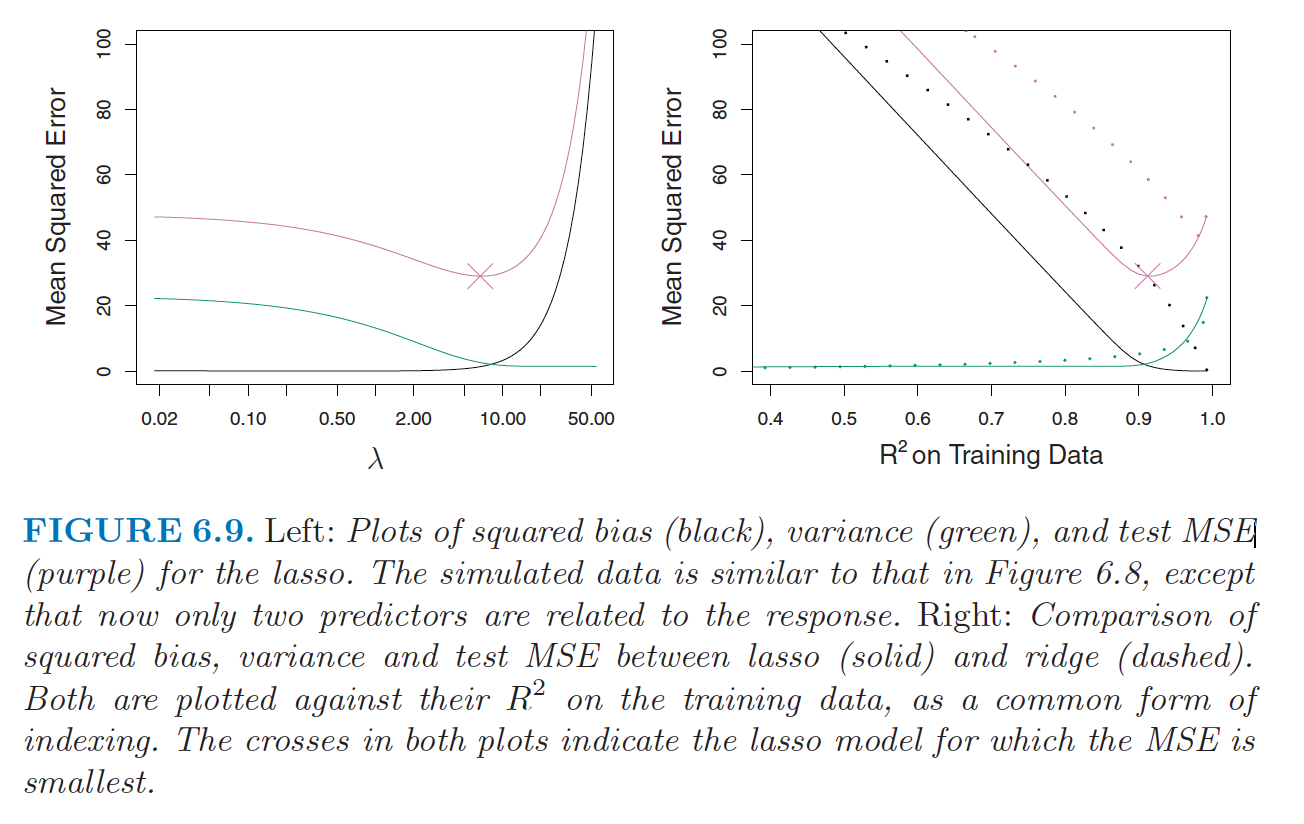

Figure 14.2: From ISLR, pgs 245-246. The data in Figure 6.8 were generated in such a way that all 45 predictors were related to the response. That is, none of the true coefficients beta1,… , beta45 equaled zero. The lasso implicitly assumes that a number of the coefficients truly equal zero. Consequently, it is not surprising that ridge regression outperforms the lasso in terms of prediction error in this setting. Figure 6.9 illustrates a similar situation, except that now the response is a function of only 2 out of 45 predictors. Now the lasso tends to outperform ridge regression in terms of bias, variance, and MSE.

Figure 14.3: From ISLR, pgs 245-246. The data in Figure 6.8 were generated in such a way that all 45 predictors were related to the response. That is, none of the true coefficients beta1,… , beta45 equaled zero. The lasso implicitly assumes that a number of the coefficients truly equal zero. Consequently, it is not surprising that ridge regression outperforms the lasso in terms of prediction error in this setting. Figure 6.9 illustrates a similar situation, except that now the response is a function of only 2 out of 45 predictors. Now the lasso tends to outperform ridge regression in terms of bias, variance, and MSE.

Trevor Hastie, Tibshirani, and Tibshirani (2020) comment on the theoretical literature as well as perform extensive simulations to compare best subsets, forward stepwise, and the Lasso. The paper is particularly interesting in their comparison of best subsets and Lasso.

Generally speaking, the lasso and best subset selection differ in terms of their “aggressiveness” in selecting and estimating the coefficients in a linear model, with the lasso being less aggressive than best subset selection; meanwhile, forward stepwise lands somewhere in the middle, in terms of its aggressiveness.

The paper summary is given as:

- neither best subset nor the lasso uniformly dominate the other, with best subset generally performing better in very high signal-to-noise (SNR) ratio regimes, and the lasso better in low SNR regimes;

- for a large proportion of the settings considered, best subset and forward stepwise perform similarly, but in certain cases in the high SNR regime, best subset performs better;

- forward stepwise and best subsets tend to yield sparser models (when tuned on a validation set), especially in the high SNR regime;

- the relaxed lasso (actually, a simplified version of the original relaxed estimator defined in Meinshausen (Comput. Statist. Data Anal. 52 (2007) 374–393)) is the overall winner, performing just about as well as the lasso in low SNR scenarios, and nearly as well as best subset in high SNR scenarios.

14.7 Elastic Net

It is also possible to combine ridge regression and lasso through what is called elastic net regularization. (The R package glmnet allows for the combined elastic net model.) The main idea is that the optimization contains both L-1 and L-2 penalties. The model may produce more stable estimates of the coefficients but requires and additional tuning parameter to estimate. That is, find the coefficients that minimize:

\[\sum_{i=1}^n \bigg( Y_i - b_0 - \sum_{j=1}^{p-1} b_j X_{ij} \bigg)^2 + \lambda \bigg[(1-\alpha)(\frac{1}{2})\sum_{j=1}^{p-1} b_j^2 + \alpha \sum_{j=1}^{p-1} |b_j| \bigg].\]

14.8 Reflection Questions

- How do ridge regression coefficient estimates differ from OLS estimates? How are they similar?

- How do Lasso coefficient estimates differ from OLS estimates? How are they similar?

- What is the difference between ridge regression and Lasso?

- What are some of the ways to find a good \(\lambda\) for ridge regression?

- Why does the 1-norm regularization yield “sparse” solutions? (What does “sparse” mean?)

- Give two different situations when ridge regression or Lasso are particularly appropriate.

14.10 R: Ridge Regression

The Data

The following dataset is from TidyTuesday. Again, we explore the information from The Office. The analysis here is taken from Julia Silge’s blog. She does a bit of data wrangling that I’m going to hide. Look through her blog, or see the source code for this bookdown file.

The dataset we will be working with has imdb_rating as the response variable. The predictor (explanatory) variables are: season, episode and 28 columns representing the number of lines of a particular character.

office## # A tibble: 136 × 32

## season episode episode_name andy angela darryl dwight jim kelly kevin

## <dbl> <dbl> <chr> <int> <int> <int> <int> <int> <int> <int>

## 1 1 1 pilot 0 1 0 29 36 0 1

## 2 1 2 diversity day 0 4 0 17 25 2 8

## 3 1 3 health care 0 5 0 62 42 0 6

## 4 1 5 basketball 0 3 15 25 21 0 1

## 5 1 6 hot girl 0 3 0 28 55 0 5

## 6 2 1 dundies 0 1 1 32 32 7 1

## 7 2 2 sexual harassment 0 2 9 11 16 0 6

## 8 2 3 office olympics 0 6 0 55 55 0 9

## 9 2 4 fire 0 17 0 65 51 4 5

## 10 2 5 halloween 0 13 0 33 30 3 2

## # … with 126 more rows, and 22 more variables: michael <int>, oscar <int>,

## # pam <int>, phyllis <int>, ryan <int>, toby <int>, erin <int>, jan <int>,

## # ken_kwapis <dbl>, greg_daniels <dbl>, b_j_novak <dbl>,

## # paul_lieberstein <dbl>, mindy_kaling <dbl>, paul_feig <dbl>,

## # gene_stupnitsky <dbl>, lee_eisenberg <dbl>, jennifer_celotta <dbl>,

## # randall_einhorn <dbl>, brent_forrester <dbl>, jeffrey_blitz <dbl>,

## # justin_spitzer <dbl>, imdb_rating <dbl>

set.seed(47)

office_split <- initial_split(office, strata = season)

office_train <- training(office_split)

office_test <- testing(office_split)The full linear model:

office_lm <- office_train %>%

select(-episode_name) %>%

lm(imdb_rating ~ ., data = .)

office_lm %>% tidy()## # A tibble: 31 × 5

## term estimate std.error statistic p.value

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 (Intercept) 7.19 0.315 22.8 3.83e-34

## 2 season -0.00222 0.0366 -0.0607 9.52e- 1

## 3 episode 0.0145 0.00730 1.98 5.15e- 2

## 4 andy 0.00215 0.00424 0.507 6.14e- 1

## 5 angela 0.00307 0.00865 0.354 7.24e- 1

## 6 darryl 0.000932 0.00783 0.119 9.06e- 1

## 7 dwight -0.00172 0.00380 -0.452 6.53e- 1

## 8 jim 0.00541 0.00375 1.44 1.54e- 1

## 9 kelly -0.0129 0.0101 -1.28 2.05e- 1

## 10 kevin 0.00279 0.0114 0.244 8.08e- 1

## # … with 21 more rowsWhat if \(n\) is really small?

No good! The coefficients cannot be estimated! (Here, 5 points were randomly selected, remember \(p = 31\).) The model breaks down because \((X^t X)^{-1}\) is not invertible. Notice that only 5 coefficients are estimated and no SEs are estimated.

set.seed(47)

office_train %>%

select(-episode_name) %>%

slice_sample(n = 5) %>%

lm(imdb_rating ~ ., data = .) %>%

tidy()## # A tibble: 31 × 5

## term estimate std.error statistic p.value

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 (Intercept) 7.30 NaN NaN NaN

## 2 season 0.228 NaN NaN NaN

## 3 episode 0.0264 NaN NaN NaN

## 4 andy -0.0197 NaN NaN NaN

## 5 angela 0.0499 NaN NaN NaN

## 6 darryl NA NA NA NA

## 7 dwight NA NA NA NA

## 8 jim NA NA NA NA

## 9 kelly NA NA NA NA

## 10 kevin NA NA NA NA

## # … with 21 more rowsRidge Regression model building

The vignette for glmnet is at https://cran.r-project.org/web/packages/glmnet/vignettes/glmnet_beta.pdf and is very useful. glmnet will be used as the engine in the tidymodels process.

By using a generalized linear_reg() function, mixture = 0 specifies ridge regression and mixture = 1 specifies Lasso regularization. The mixture parameter can be any number between zero and one (and then represents a model referred to as Elastic Net regularization). penalty will be a tuning parameter that is set later.

Recipe

First, a recipe needs to be specified. Note that the episode_name is not a predictor variable, and all the variables need to be normalized (particularly important to scale the variables).

Specify the engine + fit

If we set the penalty to be 47, the ridge regression has a straightforward (linear algebra) solution. The coefficients are given for the ridge regression model (and are substantially smaller than the linear regression coefficients, as expected).

Side note relevant to the code but not to the main ideas of the ridge regression.

The constraint part can be specified in two different ways. (Can also be specified as \(\sum_{j=1}^{p-1}b_j^2 \leq c\) for some \(c\), but we won’t use that construction here.)

Set

penalty = Pin: \[\min(SSE + P)\]Set \(\lambda\) in: \[\min(SSE + \lambda\sum_{j=1}^{p-1}b_{j}^2)\]

If P=0 then \(\lambda\) will also be zero (and the estimates will be the same as those from OLS). Additionally, P and \(\lambda\) will be monotonically related. That is, a large penalty corresponds to a large value of \(\lambda.\) That said, they are not functions of one another.

ridge_spec <- linear_reg(mixture = 0, penalty = 47) %>%

set_mode("regression") %>%

set_engine("glmnet")

ridge_wf <- workflow() %>%

add_recipe(office_rec)

ridge_fit <- ridge_wf %>%

add_model(ridge_spec) %>%

fit(data = office_train)It turns out that glmnet fits the model for all values of penalty at once, so we can see the coefficients for any other value of penalty of interest. Notice that the coefficients are smaller for larger values of the penalty.

## # A tibble: 31 × 3

## term estimate penalty

## <chr> <dbl> <dbl>

## 1 (Intercept) 8.36 47

## 2 season -0.00110 47

## 3 episode 0.00107 47

## 4 andy -0.000546 47

## 5 angela 0.00106 47

## 6 darryl 0.000434 47

## 7 dwight 0.000952 47

## 8 jim 0.00150 47

## 9 kelly 0.000112 47

## 10 kevin 0.000600 47

## # … with 21 more rows## # A tibble: 31 × 3

## term estimate penalty

## <chr> <dbl> <dbl>

## 1 (Intercept) 8.36 0

## 2 season -0.0114 0

## 3 episode 0.107 0

## 4 andy 0.0351 0

## 5 angela 0.0235 0

## 6 darryl 0.0103 0

## 7 dwight -0.0200 0

## 8 jim 0.0895 0

## 9 kelly -0.0700 0

## 10 kevin 0.0129 0

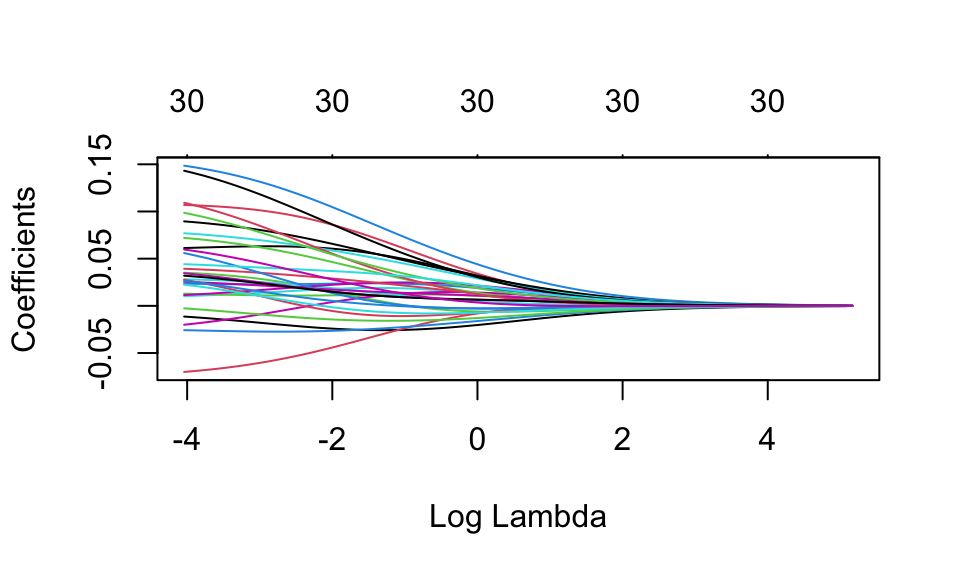

## # … with 21 more rowsWe can visualize how the magnitude of the coefficients are regularized toward zero as the penalty goes up. (We won’t get into the relationship between \(\lambda\) and penalty, they both penalize the magnitude of the coefficients, penalty can be specified per variable.)

Prediction is done like other linear models. So if predict() is used with no other parameters, it will use penalty = 47 as specified above:

predict(ridge_fit, new_data = office_train)## # A tibble: 100 × 1

## .pred

## <dbl>

## 1 8.36

## 2 8.36

## 3 8.36

## 4 8.36

## 5 8.36

## 6 8.36

## 7 8.36

## 8 8.36

## 9 8.36

## 10 8.36

## # … with 90 more rows14.10.0.1 Normalizing + Tuning + Whole Process

To tune the lambda parameter, we need to start over with the model/engine specification so that the penalty is not fixed.

ridge_spec_tune <- linear_reg(mixture = 0, penalty = tune()) %>%

set_mode("regression") %>%

set_engine("glmnet")Next, a new workflow() which includes the new model/engine specification. Notice that we don’t need to set measure = 0 here because it was only the penalty term which we designated to tune().

set.seed(1234)

office_fold <- vfold_cv(office_train, strata = season)

ridge_grid <- grid_regular(penalty(range = c(-5, 5)), levels = 50)

ridge_wf <- workflow() %>%

add_recipe(office_rec)

ridge_fit <- ridge_wf %>%

add_model(ridge_spec_tune) %>%

fit(data = office_train)

# this is the line that tunes the model using cross validation

set.seed(2020)

ridge_cv <- tune_grid(

ridge_wf %>% add_model(ridge_spec_tune),

resamples = office_fold,

grid = ridge_grid

)## # A tibble: 50 × 7

## penalty .metric .estimator mean n std_err .config

## <dbl> <chr> <chr> <dbl> <int> <dbl> <chr>

## 1 0.791 rmse standard 0.465 10 0.0369 Preprocessor1_Model25

## 2 0.494 rmse standard 0.466 10 0.0385 Preprocessor1_Model24

## 3 1.26 rmse standard 0.467 10 0.0357 Preprocessor1_Model26

## 4 0.309 rmse standard 0.470 10 0.0406 Preprocessor1_Model23

## 5 2.02 rmse standard 0.472 10 0.0349 Preprocessor1_Model27

## 6 0.193 rmse standard 0.476 10 0.0427 Preprocessor1_Model22

## 7 3.24 rmse standard 0.477 10 0.0344 Preprocessor1_Model28

## 8 5.18 rmse standard 0.483 10 0.0341 Preprocessor1_Model29

## 9 0.121 rmse standard 0.484 10 0.0447 Preprocessor1_Model21

## 10 8.29 rmse standard 0.488 10 0.0339 Preprocessor1_Model30

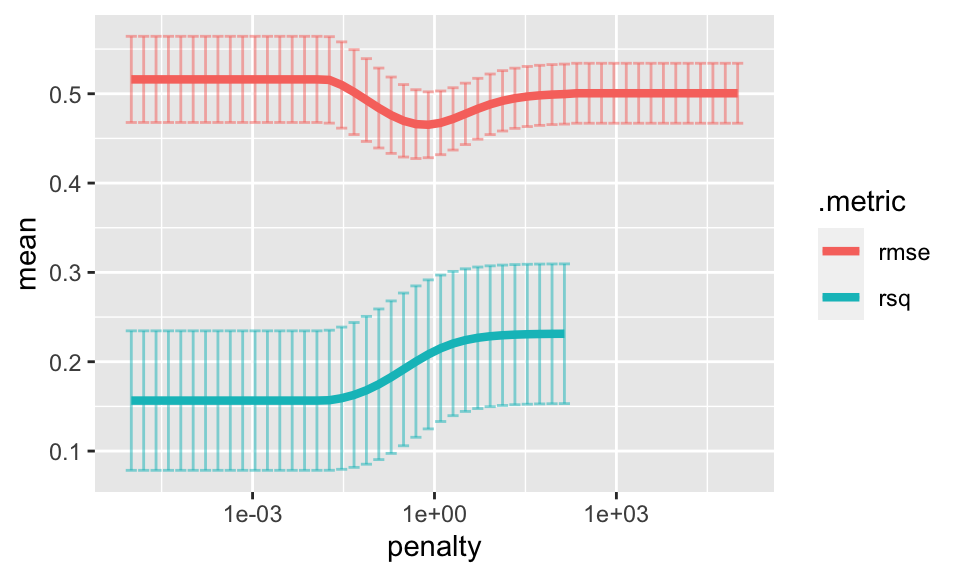

## # … with 40 more rowsInterestingly, as the penalty grows, the coefficients all get close to zero. So the prediction will be the same for all observations (prediction will be \(\overline{Y}\)). When the predictions are all the same, the computation for \(R^2\) is NA.

ridge_cv %>%

collect_metrics() %>%

ggplot(aes(x = penalty, y = mean, color = .metric)) +

geom_errorbar(aes(

ymin = mean - std_err,

ymax = mean + std_err),

alpha = 0.5) +

geom_line(size = 1.5) +

scale_x_log10()

The best model can be chosen using select_best().

best_rr <- select_best(ridge_cv, metric = "rmse")

best_rr## # A tibble: 1 × 2

## penalty .config

## <dbl> <chr>

## 1 0.791 Preprocessor1_Model25The Final Ridge Model

Using the \(\lambda\) value for the minimum MSE in the cross validation, we output the coefficients / model associated with the best \(\lambda\) for the ridge regression model. Ridge regression does not do variable selection.

finalize_workflow(ridge_wf %>% add_model(ridge_spec_tune), best_rr) %>%

fit(data = office_test) %>% tidy()## # A tibble: 31 × 3

## term estimate penalty

## <chr> <dbl> <dbl>

## 1 (Intercept) 8.42 0.791

## 2 season -0.0327 0.791

## 3 episode 0.0383 0.791

## 4 andy 0.00211 0.791

## 5 angela 0.0233 0.791

## 6 darryl 0.0264 0.791

## 7 dwight 0.0523 0.791

## 8 jim 0.0407 0.791

## 9 kelly -0.0347 0.791

## 10 kevin 0.0371 0.791

## # … with 21 more rows14.11 R: Lasso Regularization

In this section, we’ll re-run the cross validation to find a value of \(\lambda\) which minimizes the cross validated MSE. To tune the lambda parameter, we need to start over with the model/engine specification so that the penalty is not fixed. Note that with Lasso, mixture = 1.

lasso_spec_tune <- linear_reg(mixture = 1, penalty = tune()) %>%

set_mode("regression") %>%

set_engine("glmnet")Next, a new workflow() which includes the new model/engine specification. Notice that we don’t need to set measure = 0 here because it was only the penalty term which we designated to tune().

lasso_grid <- grid_regular(penalty(range = c(-5, 5)), levels = 50)

lasso_wf <- workflow() %>%

add_recipe(office_rec)

lasso_fit <- lasso_wf %>%

add_model(lasso_spec_tune) %>%

fit(data = office_train)

# this is the line that tunes the model using cross validation

set.seed(2020)

lasso_cv <- tune_grid(

lasso_wf %>% add_model(lasso_spec_tune),

resamples = office_fold,

grid = lasso_grid

)## # A tibble: 50 × 7

## penalty .metric .estimator mean n std_err .config

## <dbl> <chr> <chr> <dbl> <int> <dbl> <chr>

## 1 0.00001 rmse standard 0.531 10 0.0497 Preprocessor1_Model01

## 2 0.0000160 rmse standard 0.531 10 0.0497 Preprocessor1_Model02

## 3 0.0000256 rmse standard 0.531 10 0.0497 Preprocessor1_Model03

## 4 0.0000409 rmse standard 0.531 10 0.0497 Preprocessor1_Model04

## 5 0.0000655 rmse standard 0.531 10 0.0497 Preprocessor1_Model05

## 6 0.000105 rmse standard 0.531 10 0.0497 Preprocessor1_Model06

## 7 0.000168 rmse standard 0.531 10 0.0497 Preprocessor1_Model07

## 8 0.000268 rmse standard 0.530 10 0.0497 Preprocessor1_Model08

## 9 0.000429 rmse standard 0.529 10 0.0497 Preprocessor1_Model09

## 10 0.000687 rmse standard 0.528 10 0.0496 Preprocessor1_Model10

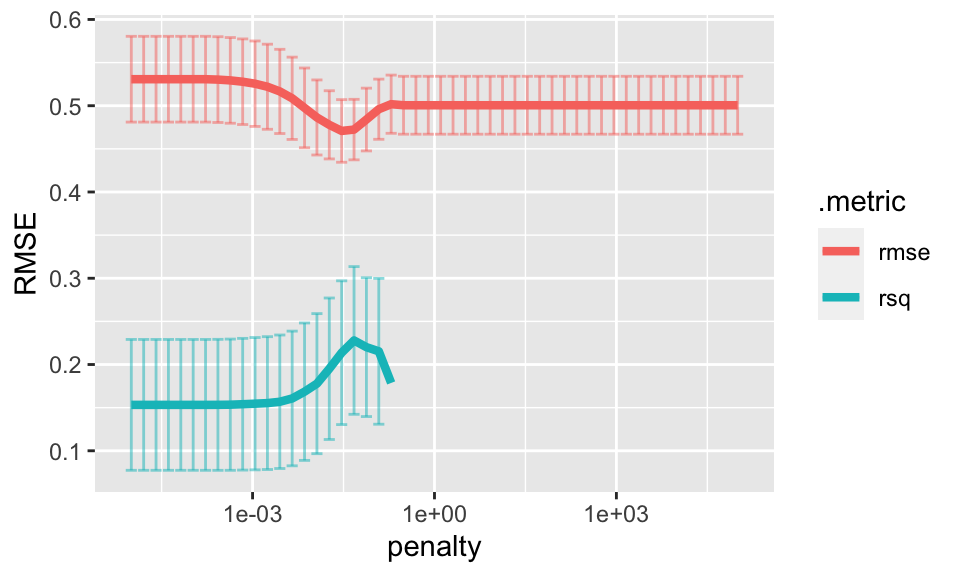

## # … with 40 more rowsInterestingly, as the penalty grows, the coefficients all get close to zero. So the prediction will be the same for all observations (prediction will be \(\overline{Y}\)). When the predictions are all the same, the computation for \(R^2\) is NA.

lasso_cv %>%

collect_metrics() %>%

ggplot(aes(x = penalty, y = mean, color = .metric)) +

geom_errorbar(aes(

ymin = mean - std_err,

ymax = mean + std_err),

alpha = 0.5) +

geom_line(size = 1.5) +

scale_x_log10() +

ylab("RMSE")

The best model can be chosen using select_best().

best_lasso <- select_best(lasso_cv, metric = "rmse")

best_lasso## # A tibble: 1 × 2

## penalty .config

## <dbl> <chr>

## 1 0.0295 Preprocessor1_Model18The Final Lasso Model

Using the \(\lambda\) value for the minimum MSE in the cross validation, we output the coefficients / model associated with the best \(\lambda\) for the ridge regression model. Lasso regularization DOES do variable selection. Note the large number of coefficients that are set to zero.

finalize_workflow(lasso_wf %>% add_model(lasso_spec_tune), best_lasso) %>%

fit(data = office_test) %>% tidy()## # A tibble: 31 × 3

## term estimate penalty

## <chr> <dbl> <dbl>

## 1 (Intercept) 8.42 0.0295

## 2 season -0.112 0.0295

## 3 episode 0.115 0.0295

## 4 andy 0 0.0295

## 5 angela 0.00412 0.0295

## 6 darryl 0.0195 0.0295

## 7 dwight 0.0665 0.0295

## 8 jim 0.0902 0.0295

## 9 kelly -0.0518 0.0295

## 10 kevin 0.125 0.0295

## # … with 21 more rows